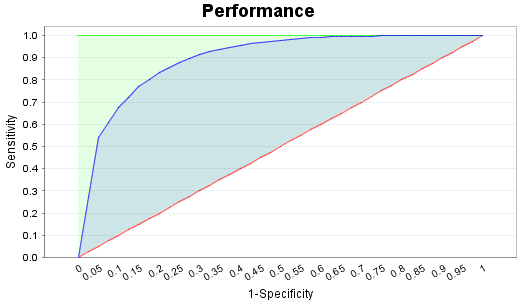

Area Under the ROC Curve (AUC)

Rank-based measure of predictive model performance.

The Area Under the ROC Curve (AUC) is another way to measure predictive model performance. It is the area under the Receiver Operating Characteristic (ROC) curve. The AUC is linked to Predictive Power (PP) by the following formula: PP = 2 * AUC - 1. For a simple scoring predictive model with a binary target, the AUC is the probability that a randomly chosen signal observation (positive) will have a higher score than a randomly chosen non-signal observation (negative).
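The probabilistic reading of the AUC can be checked directly on a small example. The following is a minimal sketch, not the product's implementation: the function names `pairwise_auc` and `predictive_power` and the sample scores are hypothetical, and tied scores are assumed to count as half a win, which is a common convention.

```python
def pairwise_auc(scores, labels):
    """Estimate the AUC as the share of (signal, non-signal) pairs
    in which the signal observation has the higher score.
    Ties are counted as half a win (an assumed convention)."""
    signals = [s for s, y in zip(scores, labels) if y == 1]
    non_signals = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in signals:
        for n in non_signals:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(signals) * len(non_signals))


def predictive_power(auc):
    """Apply the relation PP = 2 * AUC - 1."""
    return 2 * auc - 1


# Illustrative data only: 1 marks a signal, 0 a non-signal.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0, 0]

auc = pairwise_auc(scores, labels)  # 8 of the 9 pairs are ranked correctly
pp = predictive_power(auc)
print(f"AUC = {auc:.4f}, PP = {pp:.4f}")
```

With these made-up scores, a model that ranks at random would give an AUC near 0.5 and a PP near 0, while a perfect ranking would give 1 for both.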

One advantage of the Area Under the ROC Curve is its independence from the target distribution. For example, even if you duplicate the rows of every positive observation in the dataset, the AUC of the predictive model stays the same.
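The independence from the target distribution can be illustrated by duplicating the positive rows and recomputing the AUC. This is a sketch under assumptions: `pairwise_auc` is a hypothetical helper that counts correctly ranked (signal, non-signal) pairs, and the data is invented for the illustration.

```python
def pairwise_auc(scores, labels):
    """Share of (signal, non-signal) pairs ranked correctly; ties count 0.5."""
    signals = [s for s, y in zip(scores, labels) if y == 1]
    non_signals = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in signals
        for n in non_signals
    )
    return wins / (len(signals) * len(non_signals))


# Illustrative data only: 1 marks a signal, 0 a non-signal.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0, 0]

# Append a second copy of every positive row, which changes the
# target distribution from 3 signals out of 6 rows to 6 out of 9.
positive_scores = [s for s, y in zip(scores, labels) if y == 1]
dup_scores = scores + positive_scores
dup_labels = labels + [1] * len(positive_scores)

auc_original = pairwise_auc(scores, labels)
auc_duplicated = pairwise_auc(dup_scores, dup_labels)
print(auc_original, auc_duplicated)
```

Duplication multiplies both the number of correctly ranked pairs and the total number of pairs by the same factor, so the ratio is unchanged.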

Sensitivity, which appears on the Y axis, is the proportion of CORRECTLY identified signals (true positives) out of all actual signals in the validation data source.

[1 – Specificity], which appears on the X axis, is the proportion of INCORRECT assignments to the signal class (false positives) out of all actual non-signals in the validation data source. (Specificity, as opposed to [1 – Specificity], is the proportion of CORRECT assignments to the class of NON-SIGNALS, that is, true negatives out of all actual non-signals.)
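Each point on the ROC curve pairs these two quantities for one score threshold. The sketch below computes a single point. It assumes that an observation is assigned to the signal class when its score is at or above the threshold. The function name `roc_point`, the threshold value, and the data are hypothetical.

```python
def roc_point(scores, labels, threshold):
    """Return (1 - specificity, sensitivity) for one score threshold.
    Assumes score >= threshold means 'assigned to the signal class'."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        assigned_signal = score >= threshold
        if assigned_signal and label == 1:
            tp += 1  # signal correctly identified
        elif assigned_signal and label == 0:
            fp += 1  # non-signal incorrectly assigned to the signal class
        elif label == 0:
            tn += 1  # non-signal correctly left out
        else:
            fn += 1  # signal missed
    sensitivity = tp / (tp + fn)  # out of all actual signals
    specificity = tn / (tn + fp)  # out of all actual non-signals
    return 1 - specificity, sensitivity


# Illustrative data only: 1 marks a signal, 0 a non-signal.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0, 0]

x_value, y_value = roc_point(scores, labels, threshold=0.65)
print(f"X (1 - specificity) = {x_value:.4f}, Y (sensitivity) = {y_value:.4f}")
```

Sweeping the threshold from the highest score to the lowest traces the curve from (0, 0) to (1, 1); the AUC is the area under that trace.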