System Sizing, Tuning, and Limits

Here are some guidelines on sizing and tuning your SAP Analytics Cloud system, and limits for acquiring data.

- Data Integration Limits

- File Upload Limits

- Limits when Importing and Exporting Content as Files

- System Sizing and Tuning

Data Integration Limits for Models, Datasets, and Stories

Data integration consists of data acquisition and data preparation workflows. Each workflow has its own set of limits and restrictions. Depending on the data sources, content types, and actions, these workflows may be:

- Two independent steps (for example, when invoking the data acquisition workflow to import draft data, then at a later time invoking the data preparation workflow to create a model from draft data).

- One integrated step (for example, when scheduling a model refresh or refreshing a model manually).

- Just a data acquisition workflow (for example, acquiring data from a Business Planning and Consolidation data source, or importing data to a Dataset).

Data Acquisition: File size limits

- Microsoft Excel (XLSX only): Maximum file size: 200 MB.

- Comma-separated values files (CSV): Maximum file size: 2 GB.

- Excel files (XLSX only) in cloud storage through Cloud Connectors: Maximum file size: 200 MB.

- CSV files in cloud storage through Cloud Connectors: Maximum file size: 2 GB.
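The file size limits above can be checked before an upload is attempted. The following is a minimal sketch, not part of SAP Analytics Cloud: the function name `check_file_size` and the `MAX_BYTES_BY_EXTENSION` table are hypothetical, and it assumes decimal units (1 MB = 10^6 bytes), which the limits above do not specify.

```python
import os
from typing import Optional, Tuple

# File size limits from the list above. Decimal units (1 MB = 10**6 bytes)
# are an assumption; the source does not say how MB and GB are counted.
MAX_BYTES_BY_EXTENSION = {
    ".xlsx": 200 * 10**6,  # Microsoft Excel (XLSX only): 200 MB
    ".csv": 2 * 10**9,     # Comma-separated values: 2 GB
}


def check_file_size(path: str, size_bytes: Optional[int] = None) -> Tuple[bool, str]:
    """Return (ok, message) for a file that is about to be acquired.

    size_bytes can be passed explicitly (useful for files in cloud storage);
    otherwise the size is read from the local file system.
    """
    extension = os.path.splitext(path)[1].lower()
    limit = MAX_BYTES_BY_EXTENSION.get(extension)
    if limit is None:
        return False, f"unsupported file type: {extension or '(none)'}"
    if size_bytes is None:
        size_bytes = os.path.getsize(path)
    if size_bytes > limit:
        return False, f"{size_bytes} bytes exceeds the {limit}-byte limit"
    return True, "within limit"
```

For example, `check_file_size("sales.xlsx", 250 * 10**6)` reports a violation, while the same size for a `.csv` file passes.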

Data Acquisition: row, column, and cell limits

- Classic account models and stories (SAP Data Center and Non-SAP Data Center Platform), and new model type (SAP Data Center):

- For SAP BW, SAP Universe, SAP HANA, Google BigQuery, and SQL data sources only: 100,000,000 cells; 100 columns.

- For CSV and XLSX files, there is a limit on file size and a maximum of 2,000,000,000 rows; 100 columns.

- Google Sheets allows a maximum of 5 million cells (but CSV and XLSX files stored in Google Drive follow the above 2,000,000,000 row, 100 column limit).

- The SAP Concur API has an 800,000 row limit per model refresh.

- For all other data sources: 800,000 rows; 100 columns.

- The row restriction doesn't apply when importing data from all versions of SAP Business Planning and Consolidation (BPC).

- New model type (Non-SAP Data Center Platform):

- For SAP BW, SAP HANA, Google BigQuery, and SQL data sources only: 1,000,000,000 cells; 1000 columns.

- For CSV and XLSX files, there is a limit on file size and a maximum of 2,000,000,000 rows; 1000 columns.

- Google Sheets allows a maximum of 5 million cells (but CSV and XLSX files stored in Google Drive follow the above 2,000,000,000 row, 1000 column limit).

- For all other data sources: 10,000,000 rows; 1000 columns.

- Datasets (SAP Data Center and Non-SAP Data Center):

- For SAP BW, SAP HANA, Google BigQuery, and SQL data sources only: 1,000,000,000 cells; 1000 columns.

- For CSV and XLSX files, there is a limit on file size and a maximum of 2,000,000,000 rows; 1000 columns.

- Google Sheets allows a maximum of 5 million cells (but CSV and XLSX files stored in Google Drive follow the above 2,000,000,000 row, 1000 column limit).

- For all other data sources: 1,000,000 rows; 1000 columns.

Caution: While applying a predictive model to an application dataset, Smart Predict generates additional columns. The application process can be blocked if your application dataset is already close to the limit of 1,000 columns.

- The maximum number of characters in a cell is 4998.

- Each tenant can have a maximum of 30 concurrent data management jobs (import and export jobs combined). Additional submitted jobs are queued and, after 50 minutes in the queue, time out with one of these error messages: ‘the job has timed out because there were too many queued jobs’ or ‘the data acquisition job timed out in the queue.’ This applies to every type of SAP Analytics Cloud tenant, whether private (dedicated) or shared.

- Each import or export data management job has a maximum run time of 24 hours. Jobs are terminated when they reach this limit.
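The row, column, and cell limits above can be checked against a planned import. The sketch below covers the limits listed for classic account models and stories only. The class `AcquisitionLimit`, the dictionary keys, and the function `find_violations` are hypothetical names for this illustration, and it assumes that the cell count equals rows multiplied by columns.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AcquisitionLimit:
    max_columns: int
    max_rows: Optional[int] = None   # None means no row limit is listed
    max_cells: Optional[int] = None  # None means no cell limit is listed


# Limits for classic account models and stories, taken from the list above.
# The dictionary keys are hypothetical labels chosen for this sketch.
CLASSIC_MODEL_LIMITS = {
    # SAP BW, SAP Universe, SAP HANA, Google BigQuery, and SQL data sources
    "query_source": AcquisitionLimit(max_columns=100, max_cells=100_000_000),
    # CSV and XLSX files (file size limits apply separately)
    "csv_or_xlsx": AcquisitionLimit(max_columns=100, max_rows=2_000_000_000),
    # All other data sources
    "other_source": AcquisitionLimit(max_columns=100, max_rows=800_000),
}


def find_violations(rows: int, columns: int, limit: AcquisitionLimit) -> List[str]:
    """Return a list of human-readable limit violations (empty if none)."""
    problems = []
    if columns > limit.max_columns:
        problems.append(f"{columns} columns exceeds {limit.max_columns}")
    if limit.max_rows is not None and rows > limit.max_rows:
        problems.append(f"{rows} rows exceeds {limit.max_rows}")
    # Assumption: the cell count is rows multiplied by columns.
    cells = rows * columns
    if limit.max_cells is not None and cells > limit.max_cells:
        problems.append(f"{cells} cells exceeds {limit.max_cells}")
    return problems
```

Under this assumption, a 100-column query result reaches the 100,000,000-cell limit at 1,000,000 rows, so a narrower extract allows proportionally more rows.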

Data Preparation/Modeling: Row limits

(These are limits related to the fact table)

Determining row counts:

The number of rows in a fact table is not the same as the number of data points in the dimension. The following examples illustrate how imported data can translate into a different number of aggregated rows of fact data:

[Example table: 2 rows of imported data become 4 rows in the fact table]

[Example table: 4 rows of imported data become 4 rows in the fact table]

- Data preparation row limit: The row limit is determined by the data acquisition limit. However, a data preparation job times out if the browser session times out. You can increase the session timeout setting if necessary. See System Sizing and Tuning below.

- Modeling row limit:

- Subsequent data imports to an existing model cannot exceed a total of 2^31-1 (2,147,483,647) rows.

- You cannot import data or schedule a data import into an existing model if the resulting fact data would include over 2^31-1 (2,147,483,647) rows.
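The modeling row limit is simple arithmetic on the existing and incoming row counts. The sketch below uses hypothetical function names and treats every incoming row as a new fact row, which is a worst-case assumption because aggregation during import may produce fewer rows.

```python
# Maximum number of fact rows in a model: 2^31 - 1.
MAX_FACT_ROWS = 2**31 - 1  # 2,147,483,647


def remaining_capacity(existing_rows: int) -> int:
    """Rows that can still be added before the model reaches the limit."""
    return max(MAX_FACT_ROWS - existing_rows, 0)


def import_fits(existing_rows: int, incoming_rows: int) -> bool:
    """True if the import keeps the fact data at or below the limit.

    Worst-case assumption: each incoming row becomes one new fact row.
    """
    return existing_rows + incoming_rows <= MAX_FACT_ROWS
```

For example, a model that already holds 2,147,000,000 fact rows has room for 483,647 more under this assumption.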

Data Preparation: General limits

- Analytic models: If a dimension has more than 1,000,000 unique members, the dimension is made read-only.

Data Preparation/Modeling: General limits

(These are limits related to the master data)

- Columns:

- Models, and stories with embedded models (that is, when adding data into a story): 100 columns

- Dimension members: 1,000,000

- Dimension members with geo enrichment in the classic account model data preparation step: 200,000

- Dimension members with parent/child hierarchy: 150,000 (for other kinds of attributes, the 1,000,000 limit applies)

- The maximum length of imported data values is 256 characters.
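The master data limits above can be checked on a source column before import. This is a minimal sketch with hypothetical names (`check_dimension` and its flags); it assumes that each distinct value in the column becomes one dimension member and that the 256-character limit applies to each value.

```python
from typing import Iterable, List

MAX_MEMBERS = 1_000_000           # dimension members
MAX_MEMBERS_GEO = 200_000         # with geo enrichment (classic account model)
MAX_MEMBERS_HIERARCHY = 150_000   # with a parent/child hierarchy
MAX_VALUE_LENGTH = 256            # characters per imported data value


def check_dimension(values: Iterable[str],
                    parent_child: bool = False,
                    geo: bool = False) -> List[str]:
    """Return a list of limit violations for one dimension column."""
    member_limit = MAX_MEMBERS
    if geo:
        member_limit = min(member_limit, MAX_MEMBERS_GEO)
    if parent_child:
        member_limit = min(member_limit, MAX_MEMBERS_HIERARCHY)

    members = set()
    too_long = 0
    for value in values:
        # Assumption: each distinct value becomes one dimension member.
        members.add(value)
        if len(value) > MAX_VALUE_LENGTH:
            too_long += 1

    problems = []
    if len(members) > member_limit:
        problems.append(f"{len(members)} members exceeds {member_limit}")
    if too_long:
        problems.append(f"{too_long} values exceed {MAX_VALUE_LENGTH} characters")
    return problems
```

Passing a generator keeps memory use proportional to the number of distinct members rather than the number of rows.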

File Upload Limits

The maximum file size when uploading files on the Files page is 100 MB.

Limits when Importing and Exporting Content as Files

The maximum file size when importing and exporting content as files in the Deployment area is 100 MB.

System Sizing and Tuning

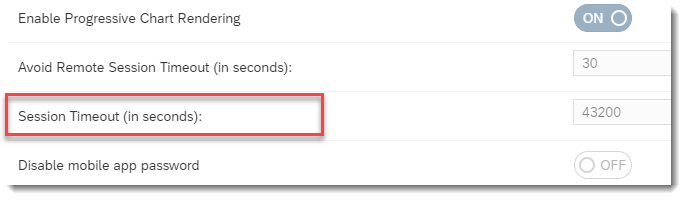

Session Timeout setting

Users need to maintain an active browser session during data import and processing (not including scheduled refreshes).

If users often encounter session timeouts during large imports, a system administrator can increase the session timeout value under (Main Menu) > System > Administration.

SAP Analytics Cloud Agent

For better handling of large data volumes, please ensure that Apache Tomcat has at least 4 GB of memory.

Data source system tuning

If you plan to import large amounts of data, make sure your data source system is tuned accordingly. Refer to the documentation from your data source vendor for information about server tuning.

Private Options

If you subscribe to a private option, the default instance of SAP Analytics Cloud starts with 128 GB HANA memory.

You may upgrade the default private instance by subscribing to SAP Analytics Cloud HANA upgrades for additional CPU, memory, disk space, and bandwidth. The memory capacity is counted in blocks of memory per month (e.g. 128 GB, 256 GB, 512 GB, etc.) indicated in the tables below for the respective deployment environments.

The specification tables for the different SAP Analytics Cloud private options are based on the underlying hyperscaler (AWS, Azure, or AliCloud) instance types deployed.

The Different Private Options

| Size | 128 GB | 256 GB | 512 GB | 1 TB |
|---|---|---|---|---|
| Cores | 24 | 32 | 40 | 80 |
| Memory | 128 GB | 256 GB | 512 GB | 1 TB |
| Disk Space | 1280 GB | 2560 GB | 5120 GB | 10240 GB |
| Bandwidth | 512 GB | 1 TB | 1 TB | 1 TB |

| Size | 128 GB | 256 GB | 512 GB | 1 TB | 2 TB |
|---|---|---|---|---|---|
| Cores | 16 | 32 | 64 | 64 | 128 |
| Memory | 122 GiB | 244 GiB | 488 GiB | 976 GiB | 1952 GiB |
| Disk Space | 1425 GB | 1425 GB | 1425 GB | 1425 GB | 1425 GB |
| Bandwidth | 10000 Mbps | 10000 Mbps | 25000 Mbps | 10000 Mbps | 25000 Mbps |

| Size | 128 GB | 256 GB | 512 GB | 1 TB | 2 TB |
|---|---|---|---|---|---|
| Cores | 20 | 32 | 64 | 64 | 128 |
| Memory | 160 GiB | 256 GiB | 512 GiB | 1024 GiB | 2000 GiB |
| Disk Space | 1280 GB | 1280 GB | 1536 GB | 2944 GB | 3968 GB |
| Bandwidth | 16000 Mbps | 8000 Mbps | 16000 Mbps | 16000 Mbps | 30000 Mbps |

| Size | 128 GB | 256 GB | 512 GB | 1 TB | 2 TB |
|---|---|---|---|---|---|
| Cores | 16 | 32 | 64 | 80 | 160 |
| Memory | 128 GiB | 256 GiB | 512 GiB | 960 GiB | 1920 GiB |
| Disk Space | 1032 GB | 1032 GB | 1032 GB | 1032 GB | 1032 GB |
| Bandwidth | 5000 Mbps | 10000 Mbps | 20000 Mbps | 15000 Mbps | 30000 Mbps |
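Because memory is sold in fixed blocks, choosing a private option amounts to picking the smallest block that covers an estimated memory requirement. The sketch below illustrates that lookup using the nominal sizes from the table headers above. The function name and the idea of a single "required memory" estimate are assumptions for illustration, and the actual usable memory per block differs by deployment environment, as the Memory rows show.

```python
from bisect import bisect_left
from typing import Optional

# Nominal block sizes in GB from the table headers above (1 TB = 1024 GB,
# 2 TB = 2048 GB). The first table lists no 2 TB option.
BLOCK_SIZES_GB = [128, 256, 512, 1024, 2048]


def smallest_block(required_gb: float) -> Optional[int]:
    """Return the smallest nominal block that covers required_gb, or None.

    required_gb is a hypothetical sizing estimate supplied by the caller.
    """
    index = bisect_left(BLOCK_SIZES_GB, required_gb)
    if index == len(BLOCK_SIZES_GB):
        return None  # larger than any listed block
    return BLOCK_SIZES_GB[index]
```

An estimate of 300 GB, for instance, maps to the 512 GB block.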

In addition to the hosted portion of SAP Analytics Cloud, you have the option to download on-premise applications. Read about the specific SAP support and maintenance policies that apply to services containing on-premise applications: SAP Note 2658835.
